In the “Learning Loops” chapter of The Networked Nonprofit, we illustrated how networked nonprofits use a lighter, real-time assessment process as they engage their community, making improvements and adjustments along the way. Some describe this as “try it and fix it.” It might seem like changing a flat tire while the car is still moving, but for many Networked Nonprofits it is a secret to their success.

I remember thinking at the time: if one networked nonprofit can do this, couldn’t a network of networked nonprofits use real-time, collaborative benchmarking and data sharing for learning? No one knew what I was talking about. I’m not sure I did either, but fast-forward two years and it is here.

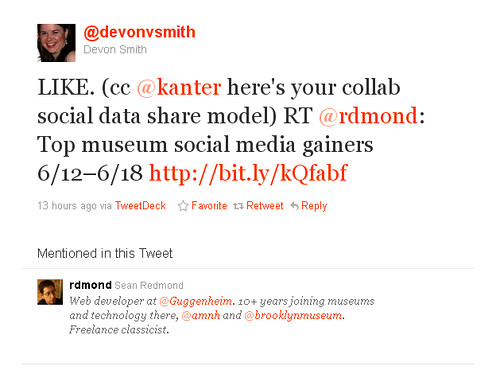

My colleague Devon Smith, a self-described data nerd who loves benchmarking, pointed out this glorious example from the museum world: a project by Sean Redmond, a web developer at the Guggenheim.

His benchmarking list of several hundred museums compares Twitter followers, Facebook fans, and Klout scores. There is also a top 50 performance list. The project was inspired by Jim Richardson’s awesome spreadsheet but “tricked out with automated data collection social media APIs.” Sean also blogs about social media best practices and standards for museums, drawing on the weekly data.

The original data set was collected in a Google spreadsheet through a manual, time-consuming process. To reduce data-entry errors and ease the chore, Sean built an automated version that pulls the data from the Twitter and Klout APIs. As Sean points out, this is what Clay Shirky calls a collaboration problem: the kind of problem the internet is better at solving than classical institutions are.
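For readers curious about the mechanics, here is a minimal Python sketch of just the ranking step, assuming the weekly follower counts have already been collected (the Twitter and Klout API calls are not shown). The `Snapshot` class, the `top_n` helper, and the museum names and numbers are all hypothetical placeholders, not Sean’s code or data.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Snapshot:
    """One museum's follower counts from two consecutive weekly pulls (hypothetical)."""
    name: str
    followers_last_week: int
    followers_this_week: int

    @property
    def pct_change(self) -> float:
        """Week-over-week follower growth as a percentage (0.0 if there was no baseline)."""
        if self.followers_last_week == 0:
            return 0.0
        delta = self.followers_this_week - self.followers_last_week
        return 100.0 * delta / self.followers_last_week


# Two ways to rank the same list: by audience size or by growth rate.
SORT_KEYS = {
    "followers": lambda s: s.followers_this_week,
    "growth": lambda s: s.pct_change,
}


def top_n(snapshots: list[Snapshot], n: int, metric: str = "followers") -> list[Snapshot]:
    """Return the n highest-ranked snapshots for the chosen metric."""
    if metric not in SORT_KEYS:
        raise ValueError(f"unknown metric: {metric!r}")
    return sorted(snapshots, key=SORT_KEYS[metric], reverse=True)[:n]


# Hypothetical placeholder numbers, not real museum data.
data = [
    Snapshot("Museum A", 10_000, 10_500),
    Snapshot("Museum B", 50_000, 50_500),
    Snapshot("Museum C", 2_000, 2_200),
]

for s in top_n(data, 2, metric="growth"):
    print(f"{s.name}: {s.followers_this_week} followers ({s.pct_change:+.1f}%)")
```

With these placeholder numbers, the smallest museum tops the growth ranking while the largest tops the raw-follower ranking, which is one reason the choice of metric matters so much.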

With the drudgery of data collection out of the way, benchmarking becomes a vehicle for networked learning in real time, or learning in public. I didn’t fully understand this until I read the conversation on Twitter.

Imagine a community of practice of nonprofit organizations working together to learn and improve their tactical execution on social media channels. Would something like this motivate the group? At the very least, it would add some subtle peer pressure to do your homework.

I shared a link to the site on my Facebook Page and asked whether it was useful (perhaps minus one of the bogus metrics). My longtime colleague Jeff Gates, an artist and museum professional, replied:

> I wonder about this emphasis on number of followers, change in percentage of followers of this list rather than focusing on strategies for engagement. Number of followers has become a benchmark stat like the number of hits on our web pages used to be. And it’s not very useful.
>
> If this leads to a discussion about strategies for success (and what success is) that’s fine. But, by itself, it’s not of great value to me.

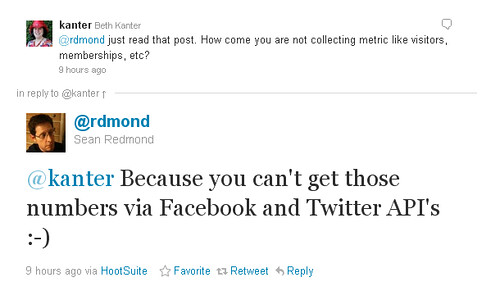

I asked Sean on Twitter why they didn’t collect metrics like membership numbers or visitors to the physical building. He joked, “There isn’t an API for that,” but followed up with “Picking low-hanging fruit lets better fruit fall.” Does tracking a metric like number of followers, as part of a grid of well-selected metrics and without drawing meaningless cause-and-effect conclusions, help us improve practice and document results? Here’s a sneak peek at a benchmarking spreadsheet that does this for an integrated campaign.

Documenting value and finding meaning are important commodities in new systems of engagement. But in the day-to-day reality of many institutions, senior managers tend to latch onto stats like number of followers on Facebook. Do you collect metrics to impress your boss, or ones that truly help you improve?

What would a valid benchmarking list for philanthropy look like? What if the Glasspockets data were used to benchmark a transparency metric?

Are you benchmarking your organization’s social media or integrated communications campaigns against peer organizations? Have you conducted a benchmarking study of best practices? Let me know in the comments!

Update: Aquariums and Zoos Benchmark

https://plus.google.com/113352607123727266547/posts/gSwE8KNwatE
